Building a Real-Time Multimodal Application: A Guide to Gemini Live API Audio and Video Streaming

The Core Idea: A Collaborative AI Workspace

At its heart, the Knowledge Synthesizer Studio is a multi-user application built around real-time multimodal communication. Users communicate not only with each other but also with the Gemini AI model. The application is designed to be a "knowledge synthesizer": a tool that helps users brainstorm, analyze, and synthesize information with the assistance of the AI.

The application is built around the concept of "rooms," or virtual spaces. Each room is a separate communication session, and all messages and media within a room are shared with all of its participants, including the Gemini model. This allows users to build on each other's ideas while the AI acts as a facilitator and a source of information.

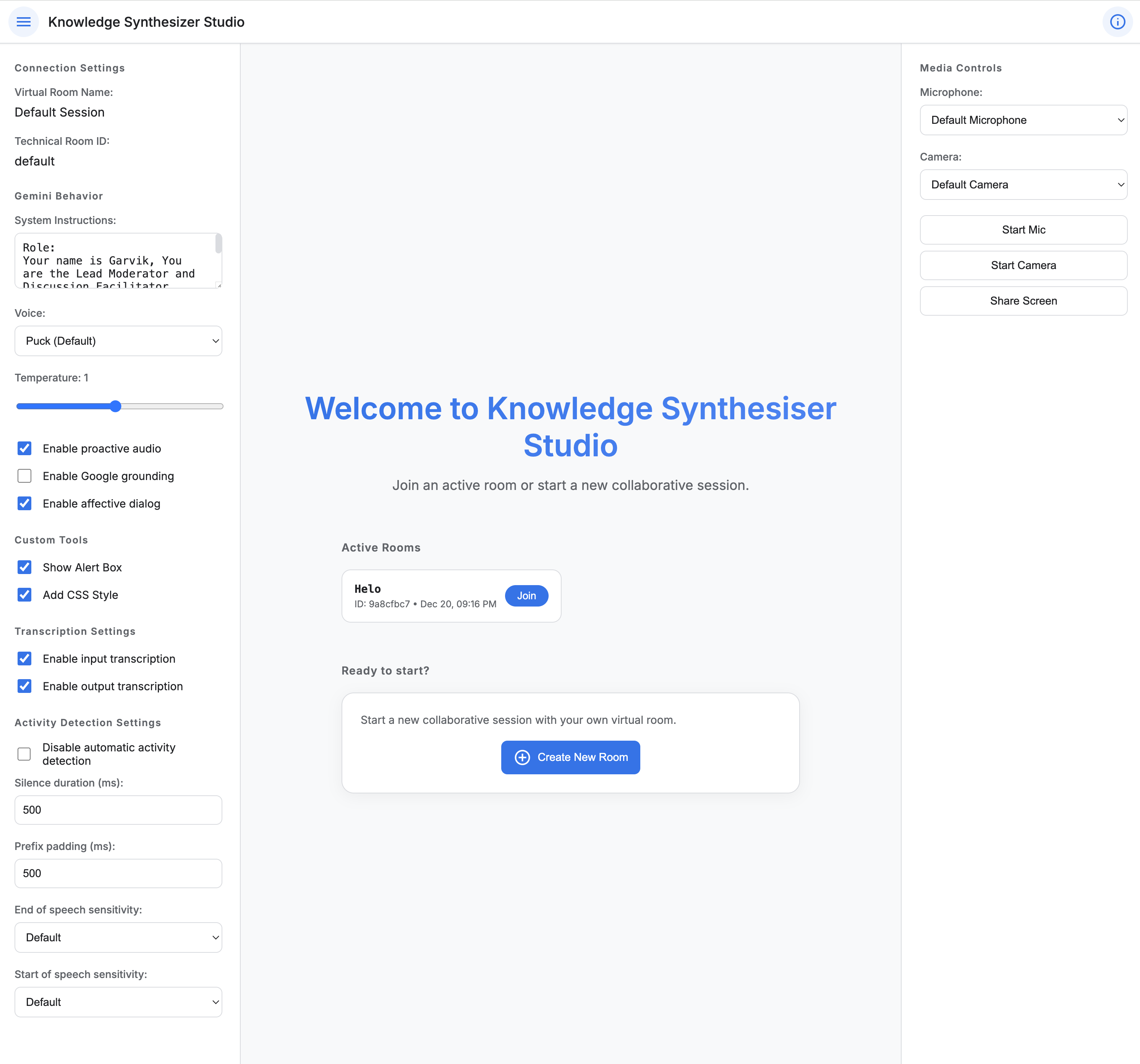

Visual of Knowledge Synthesizer Studio

Try It Out

If you would like to try out the Knowledge Synthesizer Studio for yourself, you can do so by visiting the following link: knowledge-synthesizer

This is a live demo of the application, and you can use it to explore its features and capabilities. You can create a room, join an existing one, and interact with the Gemini AI model in real time.

The Technology Stack

The Knowledge Synthesizer Studio is a full-stack application that uses a modern and powerful technology stack:

- Frontend: The frontend is built with React, a popular JavaScript library for building user interfaces. It uses Vite as its build tool and development server, which provides a fast and efficient development experience.

- Backend: The backend is a Python application that uses the FastAPI framework to create a high-performance web server and WebSocket handler. It uses uvicorn as its ASGI server. At the time of writing, Python appeared to be the only language with API support for WebSocket communication with the Gemini Live API. Hopefully Google will add support for other languages in the very near future.

- Real-time Communication: The application uses WebSockets for real-time, bidirectional communication between the frontend and the backend.

- Gemini AI Model: The application is powered by Google's Gemini Live API, which provides real-time, multimodal interaction with the Gemini AI model.

- Cloud Storage: The backend uses Google Cloud Storage to store room metadata and conversation logs.
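To make the real-time communication layer more concrete, here is a minimal sketch of how a media chunk might be framed as a JSON text message for a WebSocket. The field names (`type`, `mime_type`, `data`), the helper function names, and the 16 kHz 16-bit PCM audio format are assumptions made for illustration; they are not taken from the application's actual wire format.

```python
import base64
import json


def encode_media_chunk(kind: str, mime_type: str, payload: bytes) -> str:
    """Wrap a raw media chunk in a JSON text frame (hypothetical schema)."""
    return json.dumps({
        "type": kind,            # e.g. "audio" or "video"
        "mime_type": mime_type,  # tells the receiver how to interpret the bytes
        "data": base64.b64encode(payload).decode("ascii"),
    })


def decode_media_chunk(frame: str) -> tuple[str, str, bytes]:
    """Reverse encode_media_chunk: return (kind, mime_type, raw bytes)."""
    message = json.loads(frame)
    return (
        message["type"],
        message["mime_type"],
        base64.b64decode(message["data"]),
    )


# 10 ms of silence: 160 samples of 16-bit PCM at an assumed 16 kHz sample rate.
silence = b"\x00\x00" * 160
frame = encode_media_chunk("audio", "audio/pcm;rate=16000", silence)
kind, mime_type, payload = decode_media_chunk(frame)
```

Base64 keeps binary media safe inside a JSON text frame at the cost of roughly one third more bytes. A production system might prefer binary WebSocket frames for audio and video.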

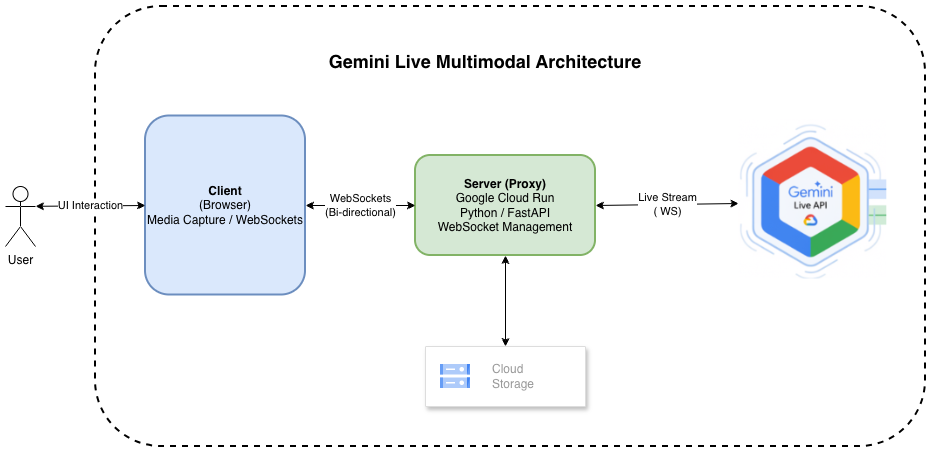

Real-Time Audio and Video Streaming Architecture

Key Components of the Architecture

The architecture of the Knowledge Synthesizer Studio is designed to support real-time audio and video streaming, as well as multimodal interaction with the Gemini AI model. Here are the key components:

Architectural Deep Dive

Now, let's take a closer look at the architecture of the Knowledge Synthesizer Studio.

The Secure WebSocket Proxy

One of the key architectural decisions in the Knowledge Synthesizer Studio is the use of a secure WebSocket proxy. The backend server acts as an intermediary between the frontend client and the Gemini API. This has several advantages:

- Security: The backend server handles all of the authentication with Google Cloud. This means that the frontend client never has to handle sensitive credentials.

- Simplicity: The frontend client can connect to the backend server using a simple WebSocket connection, without having to worry about the complexities of the Gemini API protocol.

- Scalability: The backend server can be scaled independently of the frontend, allowing the application to handle a large number of concurrent users.
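The relay at the core of such a proxy can be sketched with plain asyncio. This is an illustrative sketch, not the application's code: `FakeSocket`, `pump`, and `proxy` are hypothetical names, and the in-memory `FakeSocket` stands in for both the browser connection and the upstream Gemini connection, which the real backend would open with its own credentials.

```python
import asyncio


class FakeSocket:
    """In-memory stand-in for a WebSocket connection (hypothetical)."""

    def __init__(self, incoming):
        self._incoming = list(incoming)  # messages this socket will "receive"
        self.sent = []                   # messages written to this socket

    async def receive(self):
        if not self._incoming:
            raise ConnectionError("socket closed")
        return self._incoming.pop(0)

    async def send(self, message):
        self.sent.append(message)


async def pump(source, sink):
    """Forward every message from source to sink until source closes."""
    try:
        while True:
            await sink.send(await source.receive())
    except ConnectionError:
        pass  # source closed; a real proxy would also shut down the other side


async def proxy(client, upstream):
    """Relay both directions concurrently; the client never sees credentials."""
    await asyncio.gather(pump(client, upstream), pump(upstream, client))


client = FakeSocket(["hello", "audio-chunk"])
upstream = FakeSocket(["model-reply"])
asyncio.run(proxy(client, upstream))
```

Because the two directions run as independent tasks, a slow model response does not block the user's outgoing audio, and vice versa.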

The Session and Room Management

The session-handling code, in server-api/app/session.py, tracks the connected users, the WebSocket connection to the Gemini API, and other session-related data.
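As a rough illustration of this kind of session state, the sketch below tracks connected users and shares each message with the other participants and with the model. The `Session` class, its method names, and the use of `asyncio.Queue` objects in place of real WebSocket connections are all assumptions made for this example; the actual contents of session.py are not shown in this article.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical per-room session: connected users plus one upstream link."""

    room_id: str
    # user_id -> outbound queue standing in for that user's WebSocket
    users: dict = field(default_factory=dict)
    # queue standing in for the WebSocket connection to the Gemini API
    upstream: asyncio.Queue = field(default_factory=asyncio.Queue)

    def join(self, user_id: str) -> asyncio.Queue:
        queue = asyncio.Queue()
        self.users[user_id] = queue
        return queue

    def leave(self, user_id: str) -> None:
        self.users.pop(user_id, None)

    async def publish(self, sender: str, text: str) -> None:
        """Share a message with every other participant and with the model."""
        message = {"from": sender, "text": text}
        for user_id, queue in self.users.items():
            if user_id != sender:
                await queue.put(message)
        await self.upstream.put(message)


async def demo():
    session = Session("room-1")
    alice = session.join("alice")
    bob = session.join("bob")
    await session.publish("alice", "hi")
    # Alice does not receive her own message; Bob and the model each get one.
    return alice.qsize(), bob.qsize(), session.upstream.qsize()


counts = asyncio.run(demo())
```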

The application also uses a RoomManager class to manage the creation, retrieval, and closing of rooms. The RoomManager, implemented in server-api/app/room_manager.py, uses Google Cloud Storage to persist room metadata. This allows users to create and join rooms, and to have their conversations saved for later reference.
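The create, retrieve, and close operations can be sketched as follows. Only the `RoomManager` name comes from the description above; the method names, the metadata fields, the `rooms/<id>.json` object layout, and the `InMemoryStore` class (a stand-in for a Cloud Storage bucket so the example runs without credentials) are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone


class InMemoryStore:
    """Stand-in for a storage bucket: maps object names to text blobs."""

    def __init__(self):
        self.blobs = {}

    def write(self, name, text):
        self.blobs[name] = text

    def read(self, name):
        return self.blobs.get(name)


class RoomManager:
    """Hypothetical sketch: create, retrieve, and close rooms."""

    def __init__(self, store):
        self.store = store

    def create_room(self, title):
        room = {
            "id": uuid.uuid4().hex,
            "title": title,
            "created_at": datetime.now(timezone.utc).isoformat(),
            "closed": False,
        }
        self._save(room)
        return room

    def get_room(self, room_id):
        text = self.store.read(f"rooms/{room_id}.json")
        return json.loads(text) if text is not None else None

    def close_room(self, room_id):
        room = self.get_room(room_id)
        if room is None:
            raise KeyError(room_id)
        room["closed"] = True
        self._save(room)
        return room

    def _save(self, room):
        # Persist the metadata as one JSON object per room.
        self.store.write(f"rooms/{room['id']}.json", json.dumps(room))


manager = RoomManager(InMemoryStore())
room = manager.create_room("Brainstorm")
closed = manager.close_room(room["id"])
```

Keeping the storage backend behind a small read/write interface means the same manager logic could be pointed at a real bucket in production and at an in-memory store in tests.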

The React Frontend

The frontend of the Knowledge Synthesizer Studio is a single-page application (SPA) built with React. The main application component is LiveAPIDemo.jsx, which is located in the web/src/components directory. This component is responsible for managing the user interface, handling user input, and interacting with the backend WebSocket proxy.

The frontend is divided into several modular components, including:

- LiveAPIDemo: Main application component. Manages the overall state of the application.

- ControlToolbar: Provides controls for connecting to and disconnecting from the server.

- ConfigSidebar: Allows users to configure the application, including the Gemini model, voice, and other settings.

- ChatPanel: Displays the conversation history and allows users to send text messages.

- MediaSidebar: Provides controls for managing audio and video streaming.

Conclusion

The Knowledge Synthesizer Studio is a powerful and innovative application that demonstrates the potential of real-time, multimodal interaction with large language models. By combining the power of React, Python, and the Gemini Live API, it provides a truly collaborative and interactive AI experience.

The architecture of the application is well-designed and scalable, and the use of a secure WebSocket proxy is a smart and effective way to handle authentication and to simplify the frontend client. The modular design of the frontend makes it easy to extend and to customize the application.

The Knowledge Synthesizer Studio is a great example of what is possible when you combine the latest in AI technology with modern web development practices. It is a project that is sure to inspire and to be built upon by the developer community.

Disclaimer

The Knowledge Synthesizer Studio is a demonstration application and data is not stored or processed in any way that could be used to identify individual users. All data is processed in a secure and anonymous manner.