From 'AI-Ready' to 'AI-Native'

The transition from being 'AI-Ready' to 'AI-Native' represents a significant leap in how businesses leverage artificial intelligence. It's a move from simply having data and infrastructure capable of supporting AI to building systems where AI is a core, integrated component. This evolution requires modernizing both application and data layers, designing them from the ground up to be optimized for machine learning. This ensures that AI doesn't just supplement processes but drives them, leading to more intelligent, efficient, and predictive operations.

From a consumer's point of view, this marks a fundamental shift in digital interaction. Users are increasingly moving away from traditional portals that require manual navigation and clicks. Instead, they are embracing conversational interfaces as the future of digital engagement. This new paradigm allows users to interact with systems in a more natural, intuitive, and efficient way, simply by expressing their needs in plain language.

Augmenting APIs for Machine Learning Consumption

Traditionally, APIs were built to support digital applications, responding directly to user interactions. For example, a 'Get Member Details' API would be called when a user clicks their profile, fetching just enough information (like name and ID) to display on the screen. This is an 'AI-Ready' approach. To become 'AI-Native', these APIs must be augmented for consumption by AI and machine learning models. The same 'Get Member Details' API might now need to provide a much richer dataset for an AI agent—such as the member's full history, preferences, and location—to predict their needs or recommend a new service. This shift requires APIs to be more than just data-fetching tools; they must provide the deep, contextual data that machine learning models need to function intelligently. By augmenting APIs for ML, an organization enables its systems to be truly driven by AI, not just supplemented by it.
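To make the contrast concrete, here is a minimal Java sketch of the two shapes a 'Get Member Details' API might return: a trimmed summary for a UI and a richer context object for an AI agent. The record names, field names, and the in-memory `MemberDetailsService` are hypothetical illustrations, not part of any real API; a Spring Boot service would typically back them with repositories or downstream calls.

```java
import java.util.List;
import java.util.Map;

// 'AI-Ready' shape (hypothetical): just enough to render a profile header.
record MemberSummary(String memberId, String name) {}

// 'AI-Native' shape (hypothetical): the same member plus context a model can use.
record MemberContext(
        String memberId,
        String name,
        List<String> history,
        Map<String, String> preferences,
        String location) {}

// Hypothetical in-memory service; a real one would read from backend systems.
class MemberDetailsService {
    private final Map<String, MemberContext> members;

    MemberDetailsService(Map<String, MemberContext> members) {
        this.members = members;
    }

    // UI-oriented call: returns only the fields the profile screen displays.
    MemberSummary getMemberDetails(String memberId) {
        MemberContext c = find(memberId);
        return new MemberSummary(c.memberId(), c.name());
    }

    // ML-oriented call: returns the full context for an agent or model.
    MemberContext getMemberContext(String memberId) {
        return find(memberId);
    }

    private MemberContext find(String memberId) {
        MemberContext c = members.get(memberId);
        if (c == null) {
            throw new IllegalArgumentException("Unknown member: " + memberId);
        }
        return c;
    }
}
```

Exposing the summary and the full context as separate operations is one way to keep existing UI clients unchanged while giving agents the deeper view they need.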

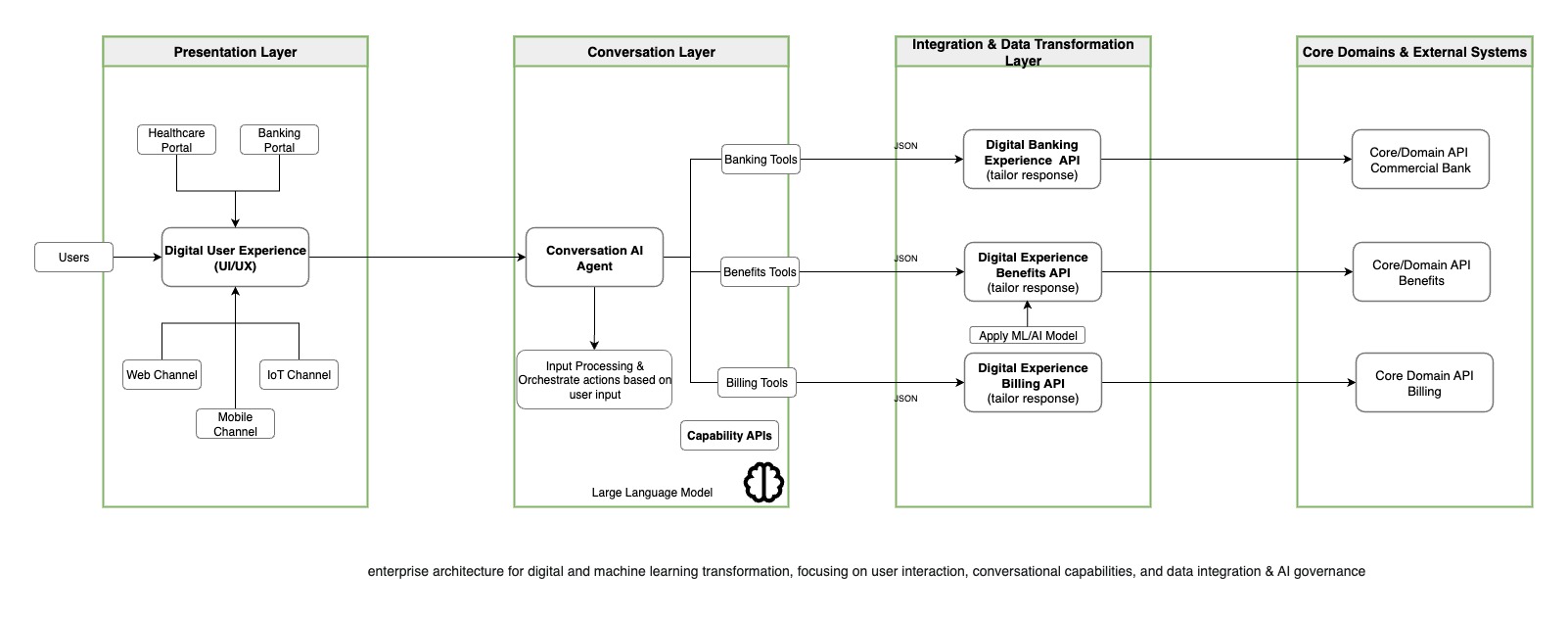

I want to highlight the layers of the AI Agent Pattern architecture that this shift affects. The presentation layer, where the UI/UX is built, integrates with the Conversation AI Agent layer. That layer is responsible for interpreting user input, extracting key entity information, and invoking the right sub-tools or sub-agents to fulfill each request. The Conversation AI Agent layer in turn calls the API layer, which is augmented for ML consumption, and the API layer interacts with the data layer, which is optimized for ML workloads.
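The request flow across these layers can be sketched as plain Java types, one per layer. This is an illustrative assumption, not the Google Agent SDK's API: the class names are hypothetical, the data layer is a lambda over fixed values, and a regular expression stands in for the LLM-based entity extraction a real Conversation AI Agent would perform.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Data layer (hypothetical): a store optimized for ML reads.
interface DataLayer {
    Map<String, String> fetchMember(String memberId);
}

// API layer augmented for ML consumption: returns the full context, not a UI subset.
class MemberContextApi {
    private final DataLayer data;

    MemberContextApi(DataLayer data) {
        this.data = data;
    }

    Map<String, String> getMemberContext(String memberId) {
        return data.fetchMember(memberId);
    }
}

// Conversation AI Agent layer: interprets input, extracts an entity, calls the API layer.
// The regex is a stand-in for model-driven entity extraction.
class ConversationAgent {
    private static final Pattern MEMBER_ID = Pattern.compile("\\bM\\d+\\b");
    private final MemberContextApi api;

    ConversationAgent(MemberContextApi api) {
        this.api = api;
    }

    String handle(String utterance) {
        Matcher m = MEMBER_ID.matcher(utterance);
        if (!m.find()) {
            return "Could you share your member ID?";
        }
        Map<String, String> ctx = api.getMemberContext(m.group());
        return "Hi " + ctx.getOrDefault("name", "there") + ", your plan is "
                + ctx.getOrDefault("plan", "unknown") + ".";
    }
}

// Presentation layer: any channel (web, mobile, IoT) forwards the user's text to the agent.
class ChatChannel {
    private final ConversationAgent agent;

    ChatChannel(ConversationAgent agent) {
        this.agent = agent;
    }

    String onUserMessage(String text) {
        return agent.handle(text);
    }
}
```

Each layer depends only on the one directly beneath it, so a channel can be added or the data store swapped without touching the agent logic.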

Presentation Layer

This layer encompasses all user-facing interfaces.

- User Interfaces (UI/UX): Central hub for user interaction, fed by various access channels.

- Access Channels:

  - Digital Access Portal: A dedicated web application for your users.

  - Mobile: Native mobile applications as a user interface.

  - Web: Browser-based applications as a user interface.

  - IoT Devices: Integration with connected devices, such as smartwatches and wearables, for interaction or data capture.

Conversation Layer

This is the intelligence and orchestration hub for user interactions.

- Conversation Agents / Orchestrator: The agent understands user requests in everyday language. It identifies and extracts key information, such as locations, dates, or medical reasons. Once it has the necessary details, the agent uses its tools to complete the task. Finally, it translates the raw data into a clear, grammatically correct, and easy-to-understand response.

- Capability APIs: These expose core business functions and capabilities to the Conversation Layer.

  - Banking Capability API: Manages consumer banking inquiries and functionality.

  - Benefits Capability API: Handles health-benefits inquiries and actions.

  - Scheduling Capability API: Manages scheduling-related tasks.

  - Member ID Card Capability API: Manages member ID card inquiries and functionality.
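Here is a minimal sketch of how the orchestrator might route a request to one of these Capability APIs. Keyword matching is a deliberately simple stand-in for the LLM-driven intent detection and entity extraction an agent framework would provide; the `Capability` enum, the `CapabilityApi` interface, and the keyword lists are hypothetical.

```java
import java.util.EnumMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical identifiers for the Capability APIs listed above.
enum Capability { BANKING, BENEFITS, SCHEDULING, MEMBER_ID_CARD }

// Each capability API is modeled as a tool the orchestrator can invoke.
interface CapabilityApi {
    String invoke(String request);
}

// Minimal orchestrator: picks the first registered capability whose keywords match.
class Orchestrator {
    private final Map<Capability, CapabilityApi> tools = new EnumMap<>(Capability.class);
    private final Map<Capability, List<String>> keywords = Map.of(
            Capability.BANKING, List.of("balance", "transfer", "account"),
            Capability.BENEFITS, List.of("benefit", "coverage", "deductible"),
            Capability.SCHEDULING, List.of("appointment", "schedule", "book"),
            Capability.MEMBER_ID_CARD, List.of("id card", "member card"));

    void register(Capability capability, CapabilityApi api) {
        tools.put(capability, api);
    }

    String route(String utterance) {
        String text = utterance.toLowerCase(Locale.ROOT);
        // Capabilities are checked in enum declaration order, so routing is deterministic.
        for (Capability c : Capability.values()) {
            boolean matches = keywords.get(c).stream().anyMatch(text::contains);
            if (matches && tools.containsKey(c)) {
                return tools.get(c).invoke(utterance);
            }
        }
        return "Sorry, I can't help with that yet.";
    }
}
```

In a real agent, each `CapabilityApi` would more likely be registered as a tool that the model chooses among, but the registry shape stays similar.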

API Transformation / Data Integration Layer

This layer is responsible for translating and enriching data between the Conversation Layer and backend systems.

- Experience API Layer: Acts as a gateway that tailors responses to the consuming platform, the consumer's context, or the desired format (e.g., JSON or XML). This layer often applies machine learning models for personalization, recommendations, or data enrichment, and it also handles data integration with the backend systems.
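The tailoring and enrichment role of the Experience API can be sketched as a single method that shapes one backend record per consumer. The `ClientType` enum, field names, and the rule-based `suggestedAction` are hypothetical; the rule only marks where an ML model (for example, a recommender) might plug in, and JSON/XML serialization is left out.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical consumers the Experience API tailors responses for.
enum ClientType { MOBILE_APP, CONVERSATION_AGENT }

// Minimal Experience API sketch: one backend record, shaped per consumer.
class ExperienceApi {
    // Fields a mobile profile screen needs (assumption for illustration).
    private static final Set<String> MOBILE_FIELDS = Set.of("memberId", "name");

    Map<String, Object> tailor(Map<String, Object> backendRecord, ClientType client) {
        Map<String, Object> out = new LinkedHashMap<>();
        switch (client) {
            case MOBILE_APP -> backendRecord.forEach((key, value) -> {
                if (MOBILE_FIELDS.contains(key)) {
                    out.put(key, value);
                }
            });
            case CONVERSATION_AGENT -> {
                // Agents get the full record plus an enrichment field.
                out.putAll(backendRecord);
                out.put("suggestedAction", suggestAction(backendRecord));
            }
        }
        return out;
    }

    // Placeholder for an ML recommender: a simple rule on open claims.
    private String suggestAction(Map<String, Object> source) {
        if (source.get("openClaims") instanceof Integer n && n > 0) {
            return "REVIEW_OPEN_CLAIMS";
        }
        return "NONE";
    }
}
```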

Source/Domain Systems (Internal/External)

The ultimate providers of data and business logic. These include internal enterprise systems (e.g., Core Banking, Billing, Claims) and external third-party services.

- Core Banking: Manages consumer banking inquiries and functionality.

- Billing: Manages billing-related tasks.

- Claims: Manages claim-related inquiries and functionality.

- Scheduling: Manages scheduling-related tasks.

AI Governance and Security

As AI becomes more integrated into core business functions, robust governance and security become paramount.

- AI Governance: This establishes the framework for using AI responsibly and ethically. It includes practices like ensuring model transparency and explainability (understanding why an AI made a certain decision), monitoring for and mitigating bias, maintaining audit trails of AI actions, and ensuring compliance with industry regulations and data privacy standards.

- Security: This focuses on protecting the entire AI ecosystem. Key security measures include safeguarding AI models from being stolen or tampered with, preventing data poisoning (where malicious data is used to corrupt the model's learning process), securing the APIs that connect to the AI, and implementing strong access controls to ensure only authorized users and systems can interact with the AI agents.
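Two of the controls above, access control and audit trails, can be combined in a thin guard placed in front of the agent's tools. This is a minimal sketch, assuming a simple role-to-tool allow list. `GuardedToolInvoker` and `AuditEntry` are hypothetical names, and a production system would persist the trail and delegate authorization to its identity provider instead of holding both in memory.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Hypothetical audit record: who tried which tool, whether it was allowed, and when.
record AuditEntry(Instant at, String principal, String tool, boolean allowed) {}

// Guard in front of agent tools: enforces an allow list and records every attempt.
class GuardedToolInvoker {
    private final Map<String, Set<String>> allowedToolsByRole;
    private final Map<String, Function<String, String>> tools;
    private final List<AuditEntry> auditTrail = new ArrayList<>();

    GuardedToolInvoker(Map<String, Set<String>> allowedToolsByRole,
                       Map<String, Function<String, String>> tools) {
        this.allowedToolsByRole = allowedToolsByRole;
        this.tools = tools;
    }

    String invoke(String principal, String role, String tool, String input) {
        boolean allowed = allowedToolsByRole.getOrDefault(role, Set.of()).contains(tool)
                && tools.containsKey(tool);
        // Denied attempts are audited too, not just successful calls.
        auditTrail.add(new AuditEntry(Instant.now(), principal, tool, allowed));
        if (!allowed) {
            throw new SecurityException(principal + " may not call " + tool);
        }
        return tools.get(tool).apply(input);
    }

    List<AuditEntry> auditTrail() {
        return Collections.unmodifiableList(auditTrail);
    }
}
```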

Observability and Monitoring

Ensuring the health, performance, and reliability of AI agents and their underlying systems is critical. This layer focuses on comprehensive monitoring and logging across the entire AI ecosystem.

- Logging: Capturing detailed records of all interactions, decisions, and system events. This includes input prompts, AI responses, tool usage, errors, and system performance metrics. Effective logging is crucial for debugging, auditing, and understanding AI behavior.

- Monitoring: Continuously tracking key performance indicators (KPIs) and operational metrics. This involves:

  - AI Model Performance: Monitoring accuracy, latency, throughput, and drift (the degradation in model performance as incoming data diverges from the data the model was trained on).

  - System Health: Tracking CPU usage, memory, network activity, and API response times for all components.

  - User Experience: Monitoring user satisfaction, engagement, and error rates to identify areas for improvement.
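As a sketch of the logging and monitoring described above, the wrapper below records call counts, error counts, and latency per tool, and emits one log line per call using `java.util.logging`. `ToolMetrics` is a hypothetical class; in a Spring Boot service these numbers would more commonly be exported through a metrics library such as Micrometer rather than kept in memory.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;
import java.util.function.Supplier;
import java.util.logging.Logger;

// Hypothetical per-tool metrics: call count, error count, and cumulative latency.
class ToolMetrics {
    private static final Logger LOG = Logger.getLogger(ToolMetrics.class.getName());

    private final Map<String, LongAdder> calls = new ConcurrentHashMap<>();
    private final Map<String, LongAdder> errors = new ConcurrentHashMap<>();
    private final Map<String, LongAdder> totalNanos = new ConcurrentHashMap<>();

    // Runs one tool call, records count, latency, and failure, then logs a summary line.
    <T> T track(String tool, Supplier<T> call) {
        long start = System.nanoTime();
        boolean ok = false;
        try {
            T result = call.get();
            ok = true;
            return result;
        } finally {
            long elapsedNanos = System.nanoTime() - start;
            counter(calls, tool).increment();
            counter(totalNanos, tool).add(elapsedNanos);
            if (!ok) {
                counter(errors, tool).increment();
            }
            LOG.info("tool=" + tool + " status=" + (ok ? "ok" : "error")
                    + " latencyMs=" + elapsedNanos / 1_000_000);
        }
    }

    long callCount(String tool) { return sum(calls, tool); }

    long errorCount(String tool) { return sum(errors, tool); }

    double errorRate(String tool) {
        long n = callCount(tool);
        return n == 0 ? 0.0 : (double) errorCount(tool) / n;
    }

    double avgLatencyMillis(String tool) {
        long n = callCount(tool);
        return n == 0 ? 0.0 : sum(totalNanos, tool) / 1_000_000.0 / n;
    }

    private static LongAdder counter(Map<String, LongAdder> m, String tool) {
        return m.computeIfAbsent(tool, k -> new LongAdder());
    }

    private static long sum(Map<String, LongAdder> m, String tool) {
        LongAdder a = m.get(tool);
        return a == null ? 0 : a.sum();
    }
}
```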

In the next part, let's set up the Conversation AI Agent using the Google Agent SDK and Spring Boot.